Understanding LLMjacking: A Cloud Security Threat You Need to Know

LLM, CYBERSECURITY

AI Generated Text, Video by Jeff Crume, IBM Engineer

4/9/2025

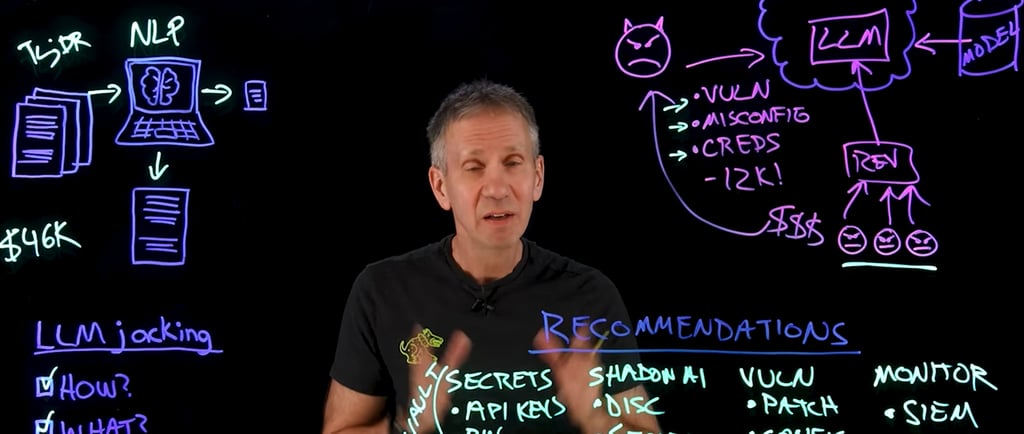

What is LLMjacking?

LLMjacking is a term that has been gaining attention in AI security. It describes a stealthy and costly attack that exploits weaknesses in cloud systems and the growing use of shadow AI. What does it mean for businesses and individuals? In essence, LLMjacking means hijacking access to AI models, typically cloud-hosted large language models, so that attackers can use the models' capabilities at the victim's expense. The result is often a significant drain on money and compute resources.

The Mechanics of LLMjacking

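Understanding how LLMjacking works is crucial for anyone who uses cloud services. Attackers typically reach large language models by exploiting weaknesses in the cloud environment that hosts them, such as exposed or stolen credentials. These models are designed to process language and generate responses, and every request consumes paid compute. Once attackers have access, they can run their own workloads on the victim's account, and they may also manipulate the models to carry out harmful instructions or reach sensitive information.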

This type of attack usually relies on two elements: vulnerabilities in cloud infrastructure and the presence of shadow AI. Shadow AI refers to AI tools and applications that people use without the organization's approval. Because these tools sit outside normal security review and monitoring, attackers can use them as a way around security measures, which can lead to breaches and misuse of cloud resources.
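
Because hijacked model access tends to show up as unexpected usage, one way to make the mechanics above concrete is to look for sudden jumps in request volume per credential. The following is a minimal sketch, not a production detector. It assumes you can export usage as (credential, hour, request count) records; the record layout, the function name `flag_usage_spikes`, and the threshold values are all hypothetical choices for illustration.

```python
from collections import defaultdict
from statistics import median


def flag_usage_spikes(records, factor=5.0, min_history=3):
    """Return {credential_id: [(hour, count), ...]} for hours that look anomalous.

    records: iterable of (credential_id, hour, request_count) tuples,
             ordered by hour (a hypothetical export format).
    An hour is flagged when its count exceeds `factor` times the median of
    that credential's earlier, unflagged hours. At least `min_history`
    earlier hours are required before anything is flagged.
    """
    history = defaultdict(list)   # credential -> counts used as the baseline
    flagged = defaultdict(list)   # credential -> suspicious (hour, count) pairs

    for cred, hour, count in records:
        past = history[cred]
        if len(past) >= min_history and count > factor * median(past):
            flagged[cred].append((hour, count))
        else:
            # Only normal-looking hours feed the baseline, so a sustained
            # burst does not quietly raise the threshold.
            past.append(count)
    return dict(flagged)


# Hypothetical sample data: key-a jumps from about 11 requests to 400.
sample_records = [
    ("key-a", 0, 10), ("key-a", 1, 12), ("key-a", 2, 11), ("key-a", 3, 400),
    ("key-b", 0, 5), ("key-b", 1, 6), ("key-b", 2, 5), ("key-b", 3, 7),
]
spikes = flag_usage_spikes(sample_records)
```

A median baseline is used here because a single earlier outlier shifts it less than it would shift a mean. A real deployment would likely also alert on cost and on requests to models the credential has never used before.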

How to Spot Vulnerabilities and Secure Your Cloud Systems

So, how can you protect yourself from LLMjacking? Start with a comprehensive audit of your cloud environment. Identify any unauthorized applications or AI tools that might pose a risk. Updating your software regularly and applying the latest security patches reduces the number of vulnerabilities an attacker can exploit.
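
As a rough illustration of the audit step, the sketch below compares observed outbound connections against a list of sanctioned AI services and reports anything that looks like shadow AI. It assumes you already have connection records (for example from proxy or DNS logs) and a maintained list of AI service hostnames. The hostnames, team names, and the function `find_shadow_ai` are hypothetical placeholders, not real services.

```python
def find_shadow_ai(observed_connections, known_ai_hosts, sanctioned_hosts):
    """Return a sorted list of (team, host) pairs that use unsanctioned AI services.

    observed_connections: iterable of (team, hostname) pairs (hypothetical format).
    known_ai_hosts: set of hostnames known to belong to AI services.
    sanctioned_hosts: subset of AI hostnames the organization has approved.
    """
    findings = set()
    for team, host in observed_connections:
        # Normalize case and a trailing dot so "LLM.Example." matches "llm.example".
        normalized = host.lower().rstrip(".")
        if normalized in known_ai_hosts and normalized not in sanctioned_hosts:
            findings.add((team, normalized))
    return sorted(findings)


# Hypothetical inventory; the .example domains are placeholders.
known_ai = {"llm.approved-vendor.example", "chat.unvetted-tool.example"}
sanctioned = {"llm.approved-vendor.example"}
connections = [
    ("finance", "llm.approved-vendor.example"),
    ("marketing", "Chat.Unvetted-Tool.example."),
    ("marketing", "chat.unvetted-tool.example"),
    ("engineering", "packages.internal.example"),
]
shadow_ai = find_shadow_ai(connections, known_ai, sanctioned)
```

The output is only as good as the two host lists, so an audit like this works best when the list of known AI services is reviewed on a regular schedule.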

It is also crucial to establish strong access controls. Implement multi-factor authentication, and ensure that only trusted users have access to sensitive data and AI models. Educate your employees about the dangers of shadow AI and the signs that could indicate a breach.
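
The access rules above can be checked mechanically once identities and their permissions are listed in one place. The sketch below is one hedged way to do that: it flags any identity that can invoke AI models without multi-factor authentication or without explicit approval. The `Identity` fields and the function `review_model_access` are assumptions made for this example and do not correspond to any particular cloud provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    """Hypothetical summary of one user or service account."""
    name: str
    mfa_enabled: bool
    can_invoke_models: bool
    approved_for_models: bool


def review_model_access(identities):
    """Return a list of (name, issue) pairs for identities that break the rules.

    Only identities that can invoke models are reviewed. Each one must have
    MFA enabled and must be explicitly approved for model access.
    """
    issues = []
    for ident in identities:
        if not ident.can_invoke_models:
            continue
        if not ident.mfa_enabled:
            issues.append((ident.name, "model access without MFA"))
        if not ident.approved_for_models:
            issues.append((ident.name, "model access not approved"))
    return issues


# Hypothetical accounts for illustration.
accounts = [
    Identity("alice", mfa_enabled=True, can_invoke_models=True, approved_for_models=True),
    Identity("build-bot", mfa_enabled=False, can_invoke_models=True, approved_for_models=False),
    Identity("carol", mfa_enabled=False, can_invoke_models=False, approved_for_models=False),
]
access_issues = review_model_access(accounts)
```

Accounts without model access are skipped on purpose, which keeps the report focused on the identities an attacker would need to compromise to reach the models.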

In conclusion, LLMjacking is a hidden cloud security threat that can wreak havoc if left unchecked. By understanding what it is and how it works, and by taking proactive steps to secure your cloud systems, you can help protect your resources and sensitive information. Stay informed and stay vigilant to reduce the risks associated with this modern AI hijacking technique.

For more detailed and specialized insights, watch Jeff Crume's video.

This work is licensed under Creative Commons Attribution 4.0 International